My previous article talked about the issues with Sitecore blobs and how the databases can grow out of control. Whilst there is a Sitecore feature to delete orphaned blobs, there is no recommended way to automate this. Depending on how you are hosting your databases, you will have different options. I am going to talk about it in relation to Azure SQL hosted databases, as this is more common with Sitecore solutions these days.

Azure SQL does not have any direct features for scheduling the run of a SQL script/stored procedure. To do this you need to utilise other Azure features. The most suitable candidates are:

- Azure Automation & Runbooks

- Function Apps

- Logic Apps

Any of these can achieve the goal but I needed to identify which is the most appropriate? It will depend on your situation. For the solution I am adding this to, we have Azure SQL databases which have IP whitelisting for the specific Web Apps so they can consume the databases. We also have “Azure Services” disabled for security reasons. Whatever solution we adopt, it must be possible to whitelist on the database firewall without opening up unnecessarily to other Azure services that we do not control. Whilst the Web Apps are not in an Isolated tier, due to cost, we still want to limit the number of IP addresses that can attempt to connect to the Azure SQL databases.

Azure Automation & Runbooks

This feels like a good fit, as it is designed for automating the running of tasks like this. It is possible to have it run as part of a private network but does require additional set up to allow for this. This article appears to do what we need with exception of running as part of a private network. If you do not have that requirement, this may be a good way to go. As we were not utilising Azure Automation already for this project I didn’t want all the additional set up for this simple task. The Function Apps and Logic Apps provide a leaner approach.

Function Apps and Logic Apps

Function Apps and Logic Apps are two other serverless solutions which by default, also do not have a private IP address on which to whitelist. There are ways to achieve this to effectively bring the Function App or Logic App within your private network. There are other articles on this so I won’t go into that detail. Check out:

- https://jordanbeandev.com/how-to-control-the-outbound-ip-address-of-a-logic-app-standard/

- https://www.middleway.eu/fixed-function-app-outbound-ip-address-via-vnet/

But once we have set up either of these so that they have fixed outbound IP address, we can whitelist the IP address and run the task on a schedule. Again, this is a perfectly useable solution, but during my reading I discovered that you can create Function Apps by utilising an existing App Service Plan.

As our solution is a traditional Sitecore XM set up hosted on Azure Web Apps, we already have a host of App Service Plans in place. Using the CM instance to also host a Function App feels like a low effort approach, which also has the benefit of not adding any cost for compute. I pursued this as an approach and this article demonstrates the steps required to make this happen.

The walkthrough

The first decision I was faced with was the approach for connecting to the database. With Azure Function Apps you can use a managed identity, which allows the app to connect without the need to store connection string credentials. This is the more secure approach but requires access to the AD to configure. Due to limitations we were not able to take this approach and instead needed to rely on a connection string.

Following the least privilege security methodology, I created a dedicated SQL user in the Master and Web databases which only have permission to execute a single stored procedure. To do this I first created the stored procedure:

CREATE PROCEDURE spr_CleanupBlobs

AS

DECLARE @r INT;

DECLARE @batchsize INT;

create table #UnusedBlobIDs (

ID UNIQUEIDENTIFIER PRIMARY KEY (ID)

);

SET @r = 1;

SET @batchsize=1000;

WITH [ExistingBlobs] ([BlobId])

AS

(SELECT [Blobs].[BlobId]

FROM [Blobs]

JOIN [SharedFields]

ON '{' + CONVERT(NVARCHAR(MAX), [Blobs].[BlobId]) + '}' = [SharedFields].[Value]

UNION

SELECT [Blobs].[BlobId]

FROM [Blobs]

JOIN [SharedFields]

ON CONVERT(NVARCHAR(MAX), [Blobs].[BlobId]) = [SharedFields].[Value]

UNION

SELECT [Blobs].[BlobId]

FROM [Blobs]

JOIN [VersionedFields]

ON '{' + CONVERT(NVARCHAR(MAX), [Blobs].[BlobId]) + '}' = [VersionedFields].[Value]

UNION

SELECT [Blobs].[BlobId]

FROM [Blobs]

JOIN [VersionedFields]

ON CONVERT(NVARCHAR(MAX), [Blobs].[BlobId]) = [VersionedFields].[Value]

UNION

SELECT [Blobs].[BlobId]

FROM [Blobs]

JOIN [UnversionedFields]

ON '{' + CONVERT(NVARCHAR(MAX), [Blobs].[BlobId]) + '}' = [UnversionedFields].[Value]

UNION

SELECT [Blobs].[BlobId]

FROM [Blobs]

JOIN [UnversionedFields]

ON CONVERT(NVARCHAR(MAX), [Blobs].[BlobId]) = [UnversionedFields].[Value]

UNION

SELECT [Blobs].[BlobId]

FROM [Blobs]

JOIN [ArchivedFields]

ON '{' + CONVERT(NVARCHAR(MAX), [Blobs].[BlobId]) + '}' = [ArchivedFields].[Value]

UNION

SELECT [Blobs].[BlobId]

FROM [Blobs]

JOIN [ArchivedFields]

ON CONVERT(NVARCHAR(MAX), [Blobs].[BlobId]) = [ArchivedFields].[Value])

INSERT INTO #UnusedBlobIDs (ID) SELECT DISTINCT [Blobs].[BlobId]

FROM [Blobs]

WHERE NOT EXISTS

( SELECT NULL

FROM [ExistingBlobs]

WHERE [ExistingBlobs].[BlobId] = [Blobs].[BlobId])

WHILE @r > 0

BEGIN

BEGIN TRANSACTION;

DELETE TOP (@batchsize) FROM [Blobs] where [Blobs].[BlobId] IN (SELECT ID from #UnusedBlobIDs);

SET @r = @@ROWCOUNT;

COMMIT TRANSACTION;

END

DROP TABLE #UnusedBlobIDs;

Then created the users:

CREATE USER blobcleanupuser WITH PASSWORD = 'xxPasswordxx';

GO

GRANT EXECUTE ON OBJECT::spr_CleanupBlobs TO blobcleanupuser;

GO

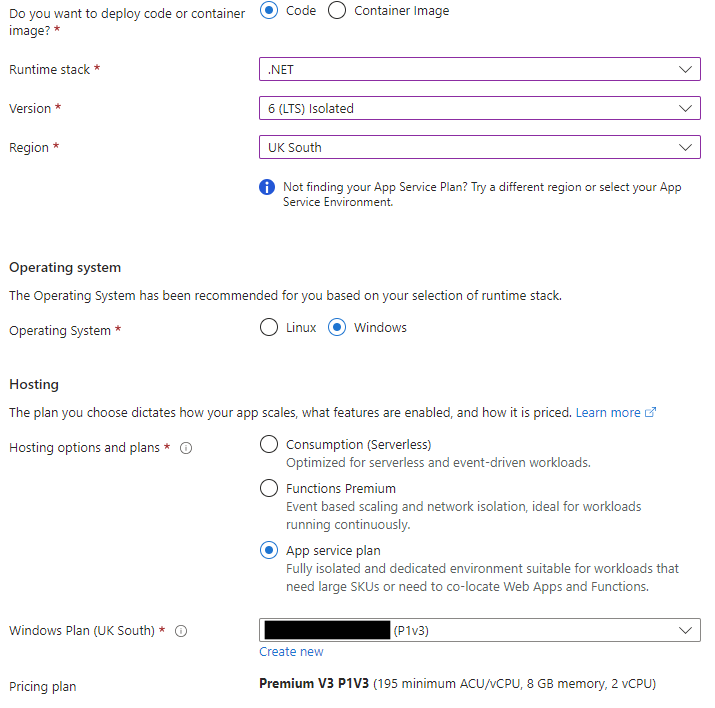

Next, I needed to create the Function App. The creating of the Function App itself is simple point and click in the Azure Portal. The key things I needed were:

- Set the stack and subsequently the existing App Service Plan.

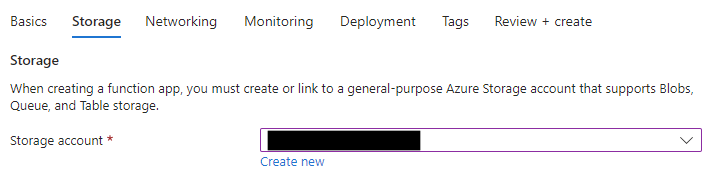

- Specify an existing Storage Account (you can create a new one if you don’t have one already).

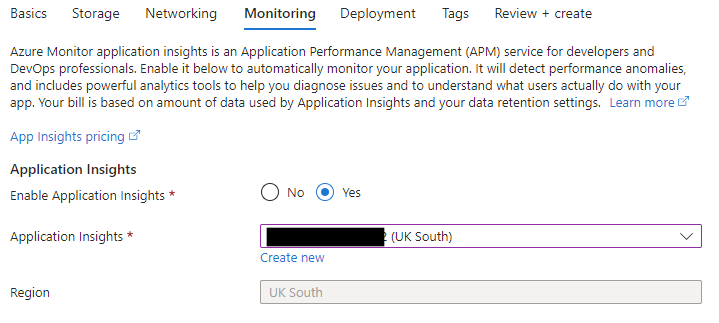

- App Insights – I linked this to an existing instance.

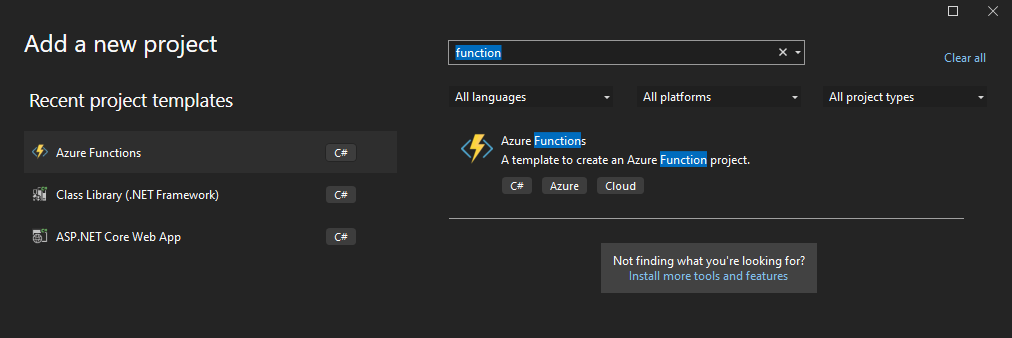

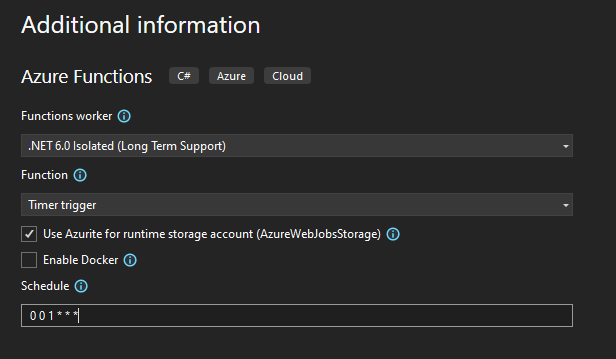

Once the Function App was provisioned I needed to get to work on writing the function. To do this I used Visual Studio. First I created a new Project in my existing Sitecore solution.

The project gives you a starter function, “Function1”. I renamed to BlobCleanup and added the c# code to connect to the Azure SQL database and run the stored procedure.

[Function("BlobCleanup")]

public void Run([TimerTrigger("0 0 1 * * *")] MyInfo myTimer)

{

_logger.LogInformation($"BlobCleanup executed at: {DateTime.Now}");

string connectionString = "Server=tcp:<server>.database.windows.net,1433;Initial Catalog=<database-name>;Persist Security Info=False;User ID=blobcleanupuser;Password=<password>;MultipleActiveResultSets=False;Encrypt=True;TrustServerCertificate=False;Connection Timeout=30;";

try

{

using (SqlConnection connection = new SqlConnection(connectionString))

{

connection.Open();

SqlCommand command = new SqlCommand("spr_CleanupBlobs", connection);

command.CommandType = System.Data.CommandType.StoredProcedure;

command.CommandTimeout = 2 * 60 * 60; //2 hours

using (SqlDataReader reader = command.ExecuteReader())

{

if (reader.Read())

{

_logger.LogInformation($"BlobCleanup: Successfully processed at: {DateTime.Now}");

}

}

}

}

catch (Exception ex)

{

_logger.LogError("BlobCleanup Error", ex);

}

_logger.LogInformation($"BlobCleanup: Next timer schedule at: {myTimer.ScheduleStatus.Next}");

}

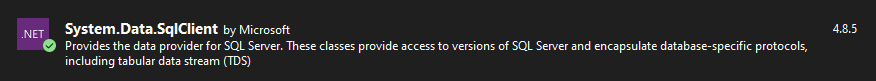

To resolve the SQL references you need to add the System.Data.SqlClient nuget package.

In the code above, the schedule is defined in the method declaration TimerTrigger("0 0 1 * * *"). This means the function will run at 1am every day. The value is a NCRONTAB expression.

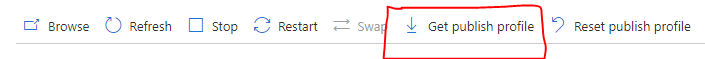

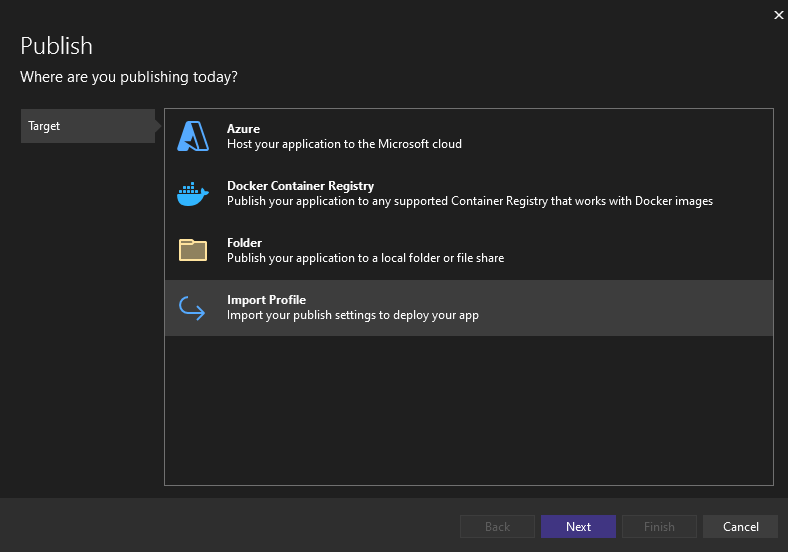

To deploy the function app code to Azure I published in VS by choosing the Import Profile option. You can download a profile from the Azure Portal on the Function App.

You will want to instead hook this up to your usual deployment pipeline/release process (e.g. via Azure DevOps), but the approach above is just to get something working and tested.

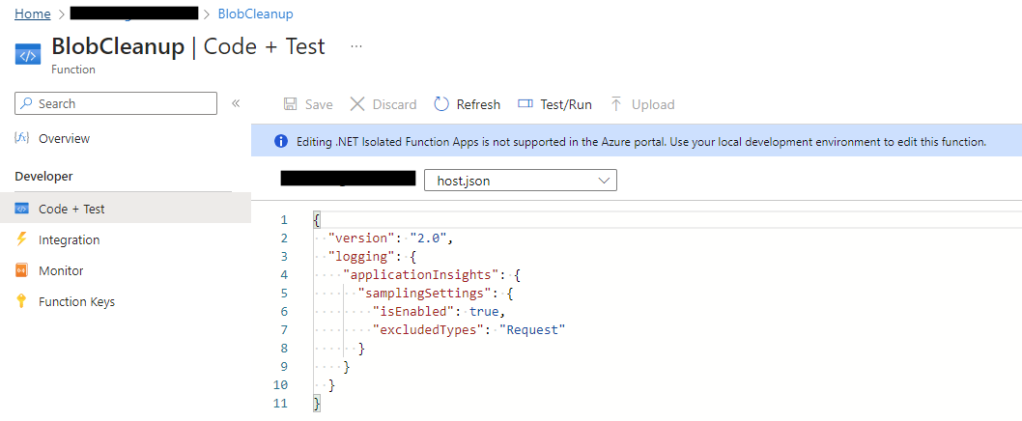

Once the code is published to Azure you can run a test by going to the specific function and navigating to the Code and Test section, and clicking “Test/Run”.

Timeout

After attempting to run the first time I hit a timeout exception. As the SQL query has the potential to run for a long time, I extended the timeout to 2 hours. This is done by adding the functionTimeout setting to the host.json file.

{

"version": "2.0",

"logging": {

"applicationInsights": {

"samplingSettings": {

"isEnabled": true,

"excludedTypes": "Request"

}

}

},

"functionTimeout": "02:00:00"

}

It is important to note there are a few different timeouts that could occur. The functionTimeout setting above is the maximum duration hat the Function App can take to complete. But as we are running a very demanding SQL command, the SQL execution timeout needs to also be sufficient. This can be seen in the c# code – command.CommandTimeout. You should make sure that these two timeout values are suitable for your situation. If you have no orphaned blobs when setting up this recurring task, I would generally expect the query to complete in less than 30 minutes if run daily. However, this depends on the level of resources the SQL database has and the amount of orphaned blobs you create in 24 hours. When I hit the default timeout of 30 minutes mentioned above, I had 2 weeks of orphaned blobs, so it may be possible to leave the functionTimeout default and just set the SQL execution timeout to 30 minutes.

Another important note is that there are limits on the timeout of the Function App if not running under an App Service Plan. See this article.

Credentials

In the example I have provided, the SQL connection string is in the codebase. This is obviously not best practice. There are options to make this more secure. As mentioned earlier, you could use a Managed Identity if you have access to the AD to set this up. Alternatively you may be able to store the connection string in an Azure Key Vault and have the Function App access the secret at runtime or deploy time. For my situation, we already had Web Apps set up to read the connection strings from an Azure Key Vault. A couple of simple modifications removes the need to store the credentials in the Function App.

You can update your c# code to get the connection string from the Function Ap’s app settings:

var config = new ConfigurationBuilder()

.AddEnvironmentVariables()

.Build();

var masterConnectionString = config.GetConnectionString("MasterConnection");

var webConnectionString = config.GetConnectionString("WebConnection");

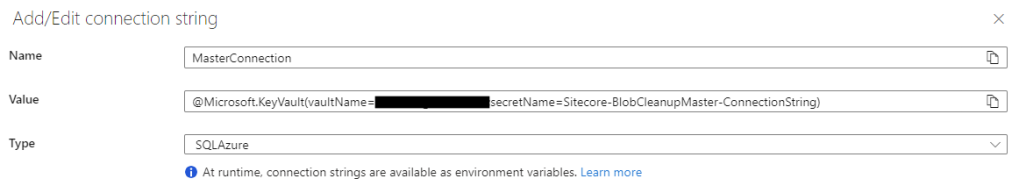

In the connection string you set the value to be a reference to a Key Vault secret. e.g.

@Microsoft.KeyVault(vaultName=<keyvault-name>;secretName=Sitecore-BlobCleanupMaster-ConnectionString)

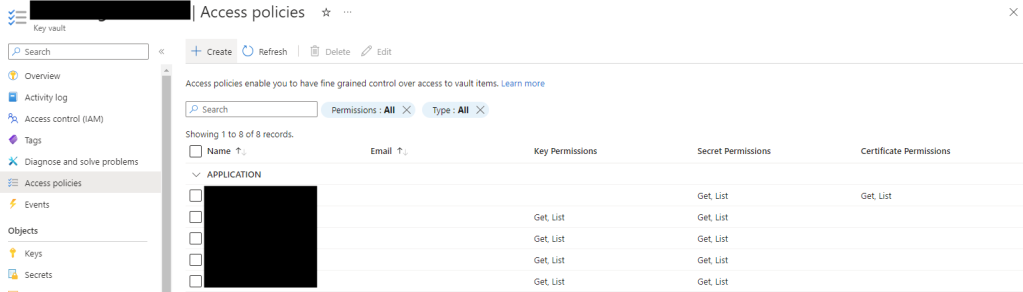

You must grant Get / List access to the Key Vault for the Function App identity.

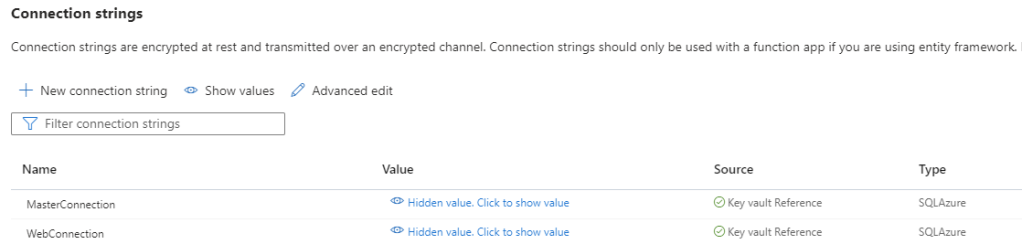

If everything is configured correctly you will get a green tick against the connection string in the Function App:

Bicep / ARM Gotcha

Whilst it is beyond the scope of this article, I thought it worth mentioning a gotcha about Function Apps and infrastructure as code. I integrated this set up into our Bicep templates so that the Function App was provisioned automatically, linked to existing Application Insights and Storage Accounts, and the function code deployed via an Azure Devops pipeline. An issue I came up against was that the deployed function was lost when redeploying the Bicep template. Normally, when you redeploy a Web App, it only updates changed items such as settings. However, there is an Application Setting you must provide to avoid losing your functions.

{

name: 'WEBSITE_RUN_FROM_PACKAGE'

value: '1'

}

Thanks to this stack for the solution.

The Result

So we have set up a task that clears out orphaned blobs on a daily basis. On this project specifically, that is a big win as it generates an obscene amount of orphaned blobs every day.

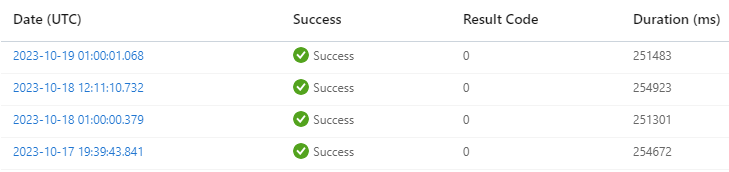

We can see in the Azure Portal that the task is running successfully, and we can see that it takes around 4 or 5 minutes to run. It is important to note that the DB is at 100% DTU utilisation during this time. If this is a problem for you, you could consider modifying the process so that the DB is scaled up before running and then back down again after completion. Many solutions may also only require the task to run monthly to keep things under control.

Conclusion

There are many ways you can automate cleanup of your orphaned blobs in a cloud SQL environment. Using a Function App on an existing App Service Plan is just one of them but one that fit nicely with our existing set up. For us, it incurred no additional cost and piggybacked off existing IP whitelisting in the SQL firewall. If you have any thoughts about how this could be done better or easier, or if this helped you with your blob nightmare, leave a comment.

One thought on “Automating deletion of orphaned Sitecore blobs”